Generative AI models have become far bigger and more capable in recent years, as they’ve been trained on more data with greater amounts of computational power. But while large language models (LLMs), the type of model that powers chatbots like ChatGPT, can perform impressive feats, like passing complex legal exams, they still regularly make mistakes.

This problem, which limits a lot of use cases for GenAI, is one that London-based startup Stanhope AI is hoping to bypass with a new AI approach that it’s just raised £2.3m to develop.

The round was led by the UCL Technology Fund (Stanhope is a University College London spinout), with Creator Fund, MMC Ventures, Moonfire Ventures and Rockmount Capital also participating.

What is Stanhope AI building?

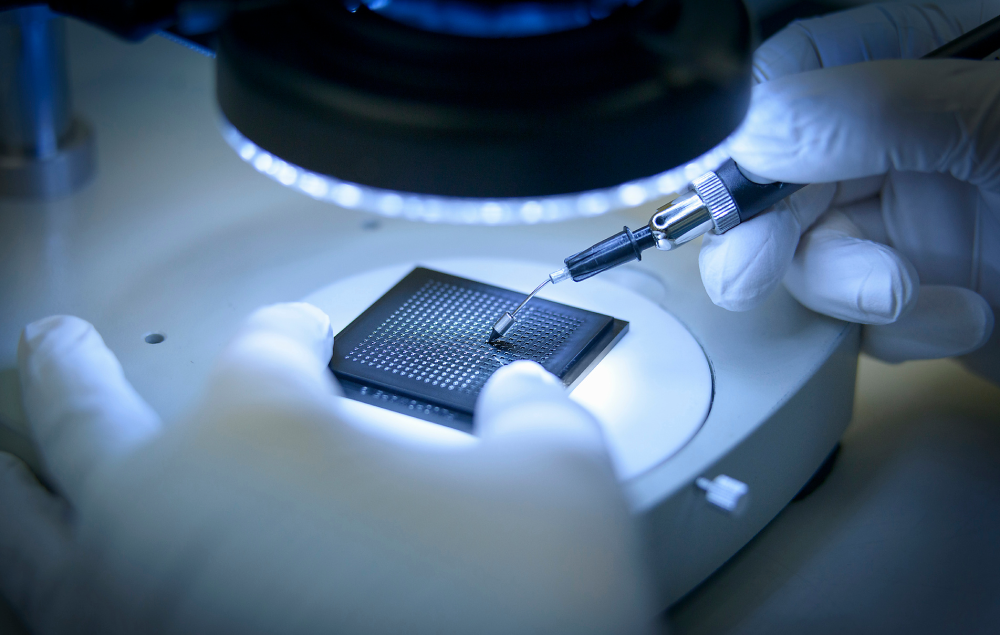

Stanhope is developing a new AI approach that’s designed to power machines that work in the real world, such as drones or warehouse robots. It’s calling its technology “agentic AI”: AI that, like a human, can be given a broad goal to complete and then work out the steps required to achieve it.

Companies are already building AI agents with LLMs to automate the work of roles like junior sales reps, but these systems are still error-prone and are currently only suitable for lower-level roles, where some mistakes are commercially acceptable.

Stanhope is building its technology from the ground up based on a principle from neuroscience called “active inference” — which describes how our brains are constantly learning on the go, based on sensory information around us, and self-correcting and improving to come to the best decision possible.

“If our AI isn’t able to make a prediction, its own internal metric says, ‘I'm not very good at this, I need to get more data, or I need to slow down, or I need to change my behaviour until I'm able to make a better prediction,’” explains Stanhope CEO Rosalyn Moran. That self-monitoring is an important factor if an intelligent system is going to be operating expensive machinery in a physical setting.
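To make that idea concrete, here is a minimal Python sketch of a system that checks its own uncertainty before acting. It is an illustration only and not Stanhope’s method: it swaps the full active inference machinery for a simple Bayesian belief with an entropy threshold, and the sensor accuracy, threshold and function names are all assumptions made up for the example.

```python
import math

SENSOR_ACCURACY = 0.8  # assumed: the hypothetical sensor is right 80% of the time


def entropy(belief):
    """Shannon entropy (in bits) of a discrete belief distribution."""
    return -sum(p * math.log2(p) for p in belief if p > 0)


def update(belief, likelihood):
    """Bayes' rule: the posterior is proportional to prior times likelihood."""
    unnormalised = [b * l for b, l in zip(belief, likelihood)]
    total = sum(unnormalised)
    return [u / total for u in unnormalised]


def likelihood_for(reading, n_states):
    """Probability of this reading under each possible true state."""
    other = (1 - SENSOR_ACCURACY) / (n_states - 1)
    return [SENSOR_ACCURACY if s == reading else other for s in range(n_states)]


def choose_action(belief, max_entropy_bits=0.5):
    """Act only when the belief is sharp enough; otherwise ask for more data."""
    if entropy(belief) > max_entropy_bits:
        return "gather_more_data"
    return "act_on_state_%d" % belief.index(max(belief))


def run(readings, n_states=3):
    """Start from a uniform belief and record the decision after each reading."""
    belief = [1.0 / n_states] * n_states
    decisions = [choose_action(belief)]
    for reading in readings:
        belief = update(belief, likelihood_for(reading, n_states))
        decisions.append(choose_action(belief))
    return belief, decisions


belief, decisions = run([1, 1])
print(decisions)
```

In this toy setup, the agent holds off while its belief is still spread across several possibilities and commits only once repeated readings agree.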

This represents a key difference from how GenAI models work: systems like ChatGPT are “pre-trained”, meaning that they rely on huge amounts of data fed to them during development, up to a fixed point in time, rather than constantly taking in new data and adapting to it. They then provide an answer to a request by making a statistical prediction of what the best answer should be based on that data.

Stanhope says it’s been able to develop this brain-like, self-correcting AI by building a system that processes data far more efficiently than GenAI models, drawing on another theory from neuroscience called the “free energy principle”. This describes how our brains, for most of our day-to-day decision-making, aren’t actively using the vast majority of the information that we have available (e.g. when we leave the house, we don’t need to think about what a curb or a pavement is to safely cross the road).

“The idea is that we come to the world with this constructed model. So we have lots and lots of the work done already. So I don't need to take in absolutely everything in my environment, I have 90% of it in a model of the world, and I need to maybe update 10% of it,” says Moran.

She explains that Stanhope builds a “world model” for its AI systems. This is based on data and rules that describe and make sense of the space in which a machine might operate, such as a warehouse, and it encodes the core rules and parameters for a robot operating in that space.

That means that when the system is assessing a decision, such as how to pick up a package, it’s focusing on the specifics of that task, with the world model taking care of the higher-level rules.
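The division of labour described above can be sketched in a few lines of Python. This is a hedged illustration, not a description of Stanhope’s software: the warehouse features, the tolerance and the learning rate are hypothetical, and the update rule is a plain prediction-error correction chosen for simplicity. The point is that most of the model is left alone, and only the entries that the sensors contradict get revised.

```python
def update_world_model(model, observation, tolerance=0.1, learning_rate=0.5):
    """Return a new model in which only surprising entries have been revised.

    An entry counts as surprising when the sensed value differs from the
    model's prediction by more than `tolerance`.
    """
    updated = dict(model)
    changed = []
    for key, predicted in model.items():
        if key not in observation:
            continue  # nothing sensed for this feature, so keep the prior
        error = observation[key] - predicted
        if abs(error) > tolerance:
            # Move part of the way towards what was actually observed.
            updated[key] = predicted + learning_rate * error
            changed.append(key)
    return updated, changed


# Hypothetical warehouse model: feature name -> expected value in metres.
warehouse = {
    "aisle_width": 3.0,
    "shelf_height": 2.0,
    "dock_distance": 12.0,
    "package_x": 1.0,
}

# Hypothetical sensor readings: only the package is not where it was expected.
sensed = {
    "aisle_width": 3.02,
    "shelf_height": 2.0,
    "dock_distance": 11.95,
    "package_x": 1.6,
}

new_model, changed = update_world_model(warehouse, sensed)
print(changed)
```

Here the aisle, shelf and dock readings fall within tolerance, so the model’s existing values stand, and only the package position is corrected.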

Getting to market

Moran says that Stanhope will primarily focus on selling its AI software to companies using robots in industry, construction and delivery, but it won its first contracts in the defence sector.

The company was spun out of UCL in 2021, when it won a contract with the British Navy to fund research into active inference.

“[The navy] were interested in deeptech ideas around autonomy, and they also wanted to work with the US Department of Defence, and lots of people had heard about this fringe active inference thing, so that was a big catalyst that brought the company together,” says Moran.

She adds that, as a UCL spinout, it’s written in Stanhope’s charter that it can’t work on technology for offensive weaponry. She also says the US military has been “a driver of innovative research in a way that people don't quite appreciate”.

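“DARPA [the US’s Defense Advanced Research Projects Agency] funded the first neural network back in the 40s,” she says. (The agency was founded in 1958, as ARPA; early neural network hardware such as the perceptron, built in the late 1950s, was funded by the US Office of Naval Research.)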

These defence contracts allowed Stanhope to prove that its active inference AI system was effective in a simulated, digital setting, and the fresh capital will now be used to prove that it works in the real world.

As it does that, it’ll develop proof-of-concept systems for the delivery, logistics and defence sectors, ahead of a Series A round to finance the company’s next phase of commercialisation.