A lot of very clever people are calling for a pause in the development of ever more powerful AI models, or at least a slowdown.

But the founder of one AI organisation says the way to bring powerful AI under control isn’t to slow down. His organisation built the dataset behind Stable Diffusion, one of the best-known generative models, which powers many of the products made by Stability AI. He says we need an AI supercomputer, financed and built by world governments.

“[Legislating to slow down] is a lost battle because models will get more powerful anyway,” says Christoph Schuhmann, founder of German AI non-profit LAION. “We should empower citizens, researchers and companies to keep pace with and mitigate risks.”

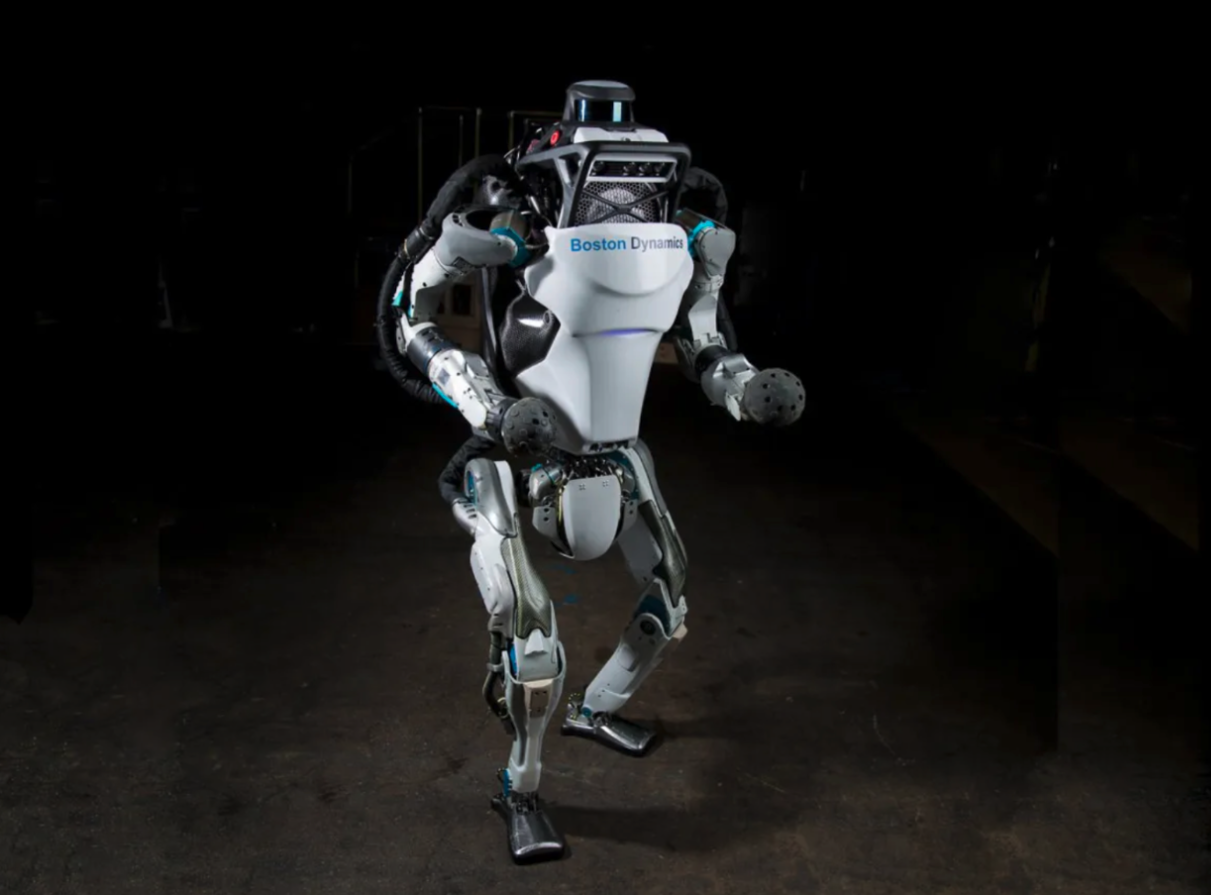

These risks, Schuhmann says, could come in the form of AI that can “puppeteer” bipedal robots like the “Tesla Bot” or Atlas from Boston Dynamics and — while it might sound like science fiction — could be upon us within a decade.

Schuhmann is not alone in his call for publicly funded AI models.

UK government AI adviser Matt Clifford recently told the Sifted podcast that nations are looking at owning AI capabilities, rather than just accessing them. Meanwhile, another wing of the AI research community says that the concentration of power by big companies is a much greater and more urgent risk than AI taking over the world.

To build societal resilience to these kinds of risks, Schuhmann estimates that world governments would need to invest $1bn-5bn in an AI-optimised supercomputer, and would “need to act quickly” if it’s to be worthwhile. While those numbers might sound like a lot, consider that the US defence budget is over $800bn, and that Bavaria alone recently announced a €300m fund for quantum.

A publicly funded AI supercomputer

Schuhmann’s concerns about AI are less about the risk of an AI “breaking out”, and more about the disruptions caused by the widespread use of AI in workplaces. What’s more, he says, serious inequalities will follow if powerful AI is dominated by big companies.

“How can we make sure that we don’t build these gigantic monopolies? How can we make sure that big tech companies can still keep doing business, while still allowing smaller businesses to benefit from these technologies without becoming completely dependent?”

This is where the publicly owned AI supercomputer comes in. Schuhmann says that the technology should be overseen by a “well-curated board of directors” made up of AI professors, open-source researchers and representatives from the “mid-scale” business community. This group, he says, should be elected by politicians and scientists, but “without years of bureaucracy”.

This group would be responsible for making quick decisions about which researchers should get access to the compute power, as long as applicants pass “basic security checks”.

This option is preferable to governments simply trying to legislate a slowdown of AI development and mandate checks on research, as both would be difficult to enforce, he says. Schuhmann argues that, whatever politicians decide, companies like OpenAI will keep doing research, even if they can’t publicly release it as a “new model”.

Sifted reached out to OpenAI for comment.

Weighing risks and benefits

But is a huge AI supercomputer simply analogous to trying to fight gun violence with more guns? Schuhmann says this misses the point, as AI models can also be used to generate huge benefits for society.

“AI models are absolutely not like weapons. Weapons are tools specifically designed for the purpose of causing damage,” he says. “AI models are super powerful tools, more like a magic wand.”

Schuhmann adds that the debate essentially comes down to your own view of human nature.

“If you have a really grim view of human nature then you would say, ‘There will be so many evil people using the magic wand to do harm’,” he says. “If you have like a brighter view of human nature, you would say, ‘Okay, some people may use a magic wand for bad stuff, but the majority of people they will use their magic wand to empower public security’.”

Schuhmann points to the alarmist warnings about the proliferation of computer viruses in the 1990s as a precedent for this technological revolution: “We are not completely swamped with them because the majority of internet users have substantial interest in keeping the internet safe.”

Ultimately, Schuhmann says that policymakers need to seriously consider the costs of not building more powerful AI.

“If you had an AI that could cure cancer within five years and you decide not to release that, because you have like a 0.001% chance of causing a disaster, then the question is: how do you weigh the risks and the potential benefits?” he says. “This is something that I cannot decide alone. There has to be a democratic process.”