The GenAI hype machine has struck healthtech, promising potentially revolutionary ways to detect, diagnose, treat and prevent diseases. But regulation is coming: the EU's AI Act, the world's first binding legislation on AI, is in its final phase of negotiations, and companies worry it may stifle growth.

So what regulatory considerations should healthtechs take into account when using AI? And how should they prepare for compliance?

👉 To listen to more experts discuss AI and its implications, check out Sifted Summit, our annual flagship event

A heavily regulated sector

The UK's medical device regulation states that a medical device cannot be put on the market unless it has a UKCA or a CE marking. A CE marking shows that a device complies with the relevant EU regulations and that the manufacturer has checked it meets the EU's health and safety requirements; a UKCA marking is the UK equivalent.

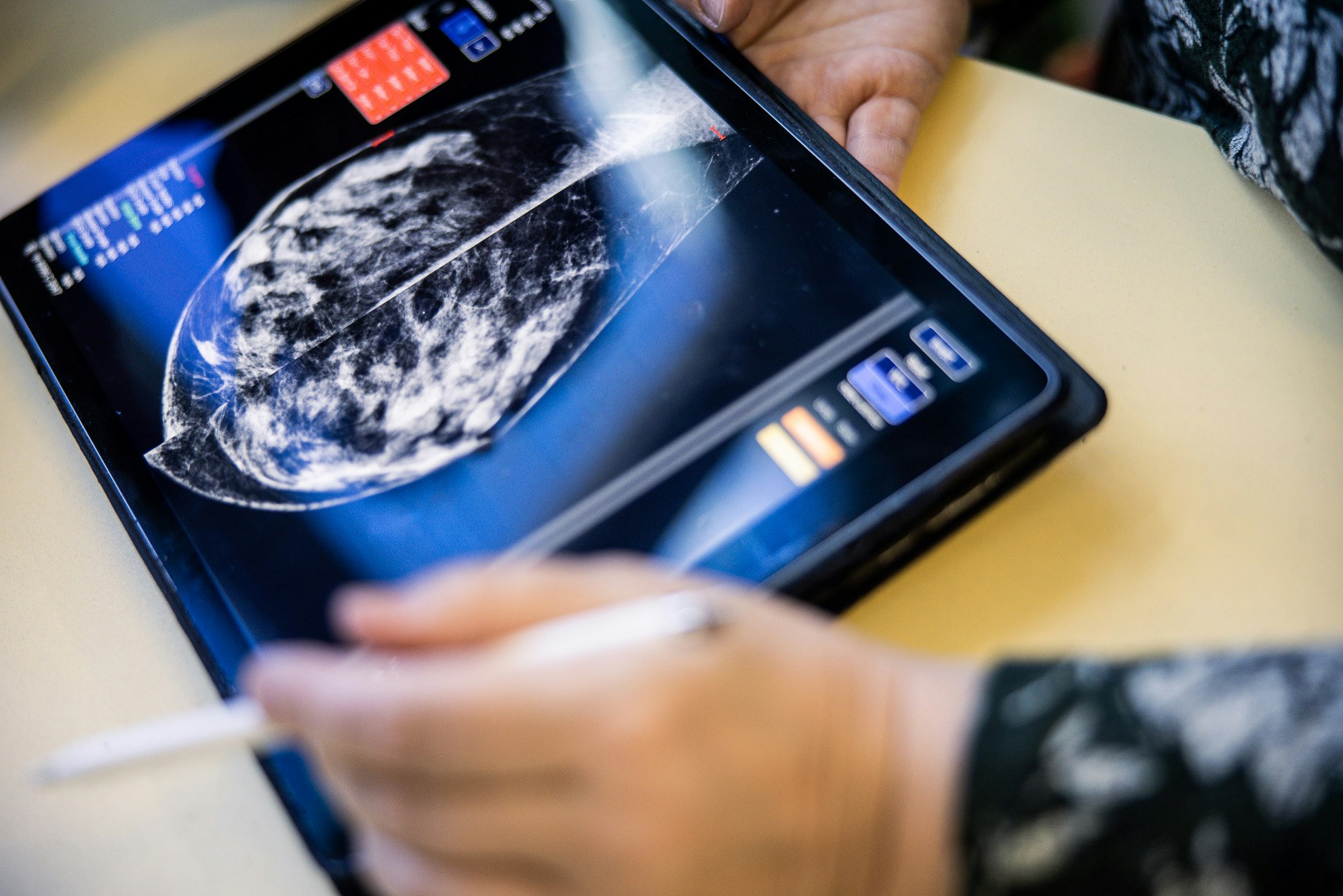

Dr Keith Grimes, a general practitioner and digital health consultant, says that “any use of AI that has a diagnostic or therapeutic function or is providing a clinical service will be considered a medical device, and therefore, impacted by regulation. For example, using an AI model to help assist with making diagnoses in the UK and in the EU — such decision support is a medical device, and therefore, will fall under the regulations there.”

The large-scale impact of healthcare products and services makes regulation a big part of the industry, so founders are already used to changing regulatory requirements.

"There were several medical apps in the past that were not regulated but are now regulated under the new medical device regulations. The sector has undergone a massive upgrade from a directive system to a regulatory system, which enforces a lot more requirements," says Pahini Pandya, founder of Panakeia, a healthtech that uses AI to provide faster and cheaper cancer diagnostics.

“We already plan our product development, go-to-market strategy and fundraising strategies in alignment with the regulations that we're going to have to deal with.”

Lars Maaløe is cofounder of Corti, an AI-powered platform offering virtual assistants for medics. He says the upcoming AI regulation won't be uncharted territory, but that the healthtech companies and products that "have the biggest impact [on human lives] will be affected the most".

How to be prepared

For Dr Grimes, given the nature of the industry, it's better to accept regulatory changes than to fight them. To prepare, he suggests that healthtechs consult external experts.

Pandya says it's also crucial for companies that have raised capital to educate their shareholders and stakeholders, so they understand the upcoming changes and how the company is preparing for them.

“Be ready for a degree of uncertainty too,” she says. “Something we've implemented at Panakeia is also to incorporate compliance as a part of your culture — to incorporate quality, high standards and best practices that are safe, stakeholder-focused and trustworthy.”

Overcoming risks

The use of large language models (LLMs) in healthtech is fraught with risks, such as data privacy issues, bias, and hallucinations or other model errors. Some experts say regulation could be key to overcoming these challenges.

Dr Grimes says that AI regulation, coupled with the enforcement of standards, could ensure that healthtechs integrate and use AI responsibly. He adds that compliance could also be a competitive advantage, helping companies stand out in the market and potentially even opening up new markets.

Pandya agrees, saying that Panakeia “leverages adhering to regulations and establishing novel best practices and guidelines to ensure patient safety, as a part of our competitive strategy”.

Mixed feelings

The tech industry has mixed feelings about AI regulation: many see it as necessary, but worry it may also slow down innovation and scare away companies and investors.

Pandya says that the long waiting periods in the regulatory process could become a challenge for rolling out AI. "If we take [the] example of CE marks, it still requires an external notified body to audit your technical documentation, and the process takes around nine to 18 months. With AI, since it moves so much faster than traditional solutions in healthcare, this will become a big bottleneck very quickly."

She adds that the impact the regulation could have on diversity, equity and inclusion is an area that isn’t discussed enough. “There's a big risk because regulations place higher barriers to entry. The higher capital requirements for bringing in new products will mean that only a limited number of founders, who have access to such capital, will be able to build products — and ultimately, that will also impact who benefits from these solutions.”

Despite the hurdles, Maaløe says the regulation of AI in healthtech is inevitable and probably for the better: "We're in need of tech innovation in healthcare, so I hope this can ensure that we don't stop innovation, but also that we get the right innovation out there."

Corti's Lars Maaløe and a host of other AI experts, including the founders of Sana and Proximie, and investors from OTB Ventures and RTP Global, will be speaking at Sifted Summit, our annual flagship event which lets you connect with the startup ecosystem in person.