The past 12 months have represented a year of collective AI hysteria, and while 2024 is likely to see some players accelerate even more, it will also see some car crashes.

This year has been one of big models, with major advances like the release of OpenAI’s GPT-4 and Google DeepMind’s Gemini demonstrating the brute-force results of pouring ever more data and computing power (aka “compute”) into new AI models.

It’s also been a year where Europe has — largely — watched from the sidelines, as Silicon Valley startups have secured big funding commitments from corporates like Amazon and Microsoft.

But 2024 could be the year when AI models for business use cases get smaller, and less reliant on the huge amounts of data required by general-purpose models.

That’s one recurring theme from Sifted’s AI predictions for 2024, featuring the views of some of Europe’s top AI founders and investors.

They’re also predicting a big boom in autonomous “agents,” shifts in the debate on AI safety and at least one casualty among Europe’s large language model (LLM) companies.

Here’s what’s in store for 2024.

Amelia Armour, partner at Amadeus Capital Partners

More AI at the edge

Emerging high-performance but smaller AI models, which now require minimal compute power, will be deployed more widely at the edge of the network over the coming year (on physical devices, for instance). This will accelerate industrial automation and increase productivity, for example in autonomous robots in distribution centres.

Supercharged data centres

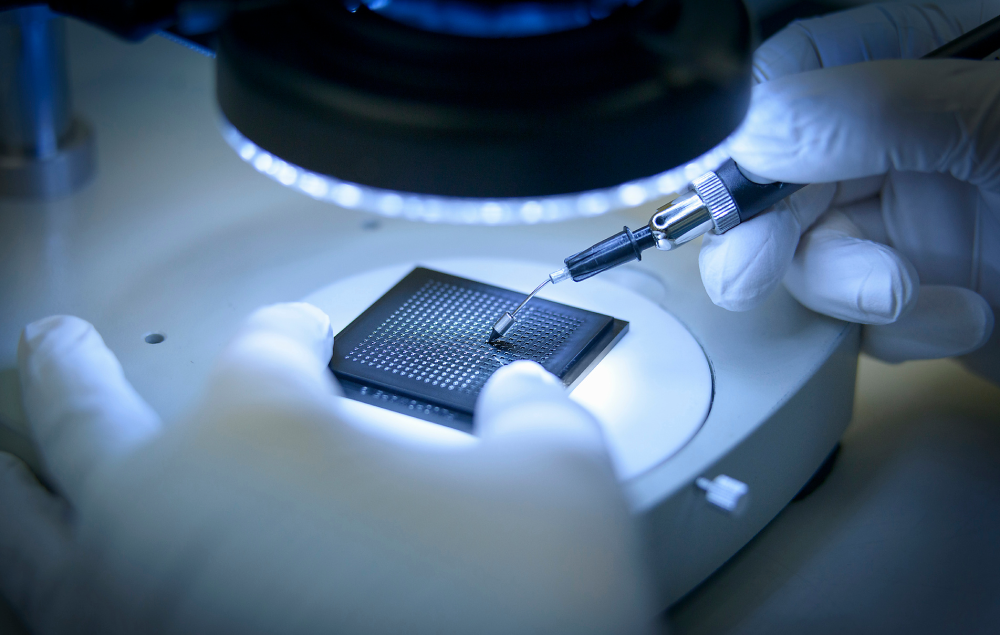

We can expect data centres to focus on network speeds to keep pace with increasing levels of AI compute, while controlling their energy requirements and associated heat generation. Hardware innovations, such as energy-efficient photonic chips that use light to move data at high speed, and more efficient cooling approaches are areas to watch for growth next year.

Peter Sarlin, CEO and cofounder of Finnish AI startup Silo

Open source, efficient models for the win in enterprise

Most companies will come to the conclusion that smaller, cheaper, more aligned and more specialised models make more sense for a clear majority of LLM use cases — and open source models will empower these.

AI and software become one

Most usage of and value creation with LLMs will happen with models embedded into software products. This LLM adoption will happen through a wide range of existing vertical and horizontal software vendors. No artificial general intelligence (AGI) will be achieved; instead, LLMs will create new features in existing software products, and focus will shift to the jobs that AI creates.

Nathan Benaich, founder of Air Street Capital

Deepfakes will play a role in elections

So far, the evidence that deepfakes have had an impact on politics has been weak. However, with rapidly advancing capabilities, we think there will be at least attempts to deploy them maliciously during the 2024 presidential election. Whether or not they really affect the result, I predict this will spur at least one major regulatory investigation.

New funding structures for compute

I’ve long predicted that we will need to find a more sustainable way of funding compute-intensive startups. High round pricing, driven by large financings raised at median dilution levels, risks becoming the norm. We’ve already started to see later-stage companies securing debt finance with their graphics processing units (GPUs) as collateral. I believe that at least one forward-thinking financial institution will attempt to normalise this.

A cool down on the AI governance debate

I think the air is going to start draining out of the global governance conversation. While the UK AI Summit was a significant moment, I just don’t believe there’s real alignment between Western democracies and China beyond a surface-level opposition to Armageddon. I don’t foresee the follow-up meetings making the same splash or leading to any concrete action by governments.

Rick Hao, principal at Speedinvest

AI safety will become an opportunity area for investment

AI safety has crossed into the Overton window this year. As enterprises rush to adopt the latest capabilities, questions of transparency, trust and governance are becoming top of mind for more and more people. However, we predict that AI safety will continue to be underinvested in compared with capabilities. We are working on one bet in this space already and will make more in 2024.

The speed of AI innovation will only get faster, leading to more surprise breakthroughs

Everyone agrees that AI capabilities are advancing fast. However, we argue that the rate of this advancement is itself speeding up, and faster than most people expect. The materials science breakthrough published by Google DeepMind is a perfect example of the kind of surprise that frontier AI research continues to bring us. In 2024, we expect more such breakthroughs.

AI agents will come of age

We expect agency (the ability of AI to carry out actions autonomously, rather than just answer prompts) to remain a hot topic, particularly when it comes to products. The more agentic a tool is, the more economically useful it is. We expect this trend to continue in 2024.

Dmitry Galperin, general partner at Runa Capital

Multi-modal AI will make a big noise

In 2024, the big thing in GenAI will be multi-modal models, which can handle different types of content like sounds, text, pictures and videos — all in one user experience. While these advancements will captivate the general public, the adoption of this technology in enterprise settings may encounter challenges related to issues like hallucinations, ethical concerns and security.

AI will have to get more trustworthy

AI observability, data quality assurance and data protection will become increasingly significant, and pivotal to fostering the widespread adoption of AI. The substantial volume of data and parameters involved in AI training is likely to drive the adoption of novel computing technologies, ranging from photonic interconnects that eliminate the data transfer bottlenecks inherent in classical von Neumann architecture to the exploration of alternative architectures such as neuromorphic and analogue computing.

Vanessa Cann, CEO and cofounder of German GenAI startup Nyonic

More specialised models

Current LLMs are limiting business use cases. They are too generic, essentially at the level of a high school graduate. In 2024, we will see more specialised models, trained on industry knowledge and jargon and tailored to different industry verticals and tasks. These will enable companies to get much better results and pave the way for more complex use cases. This will become the basis of the technological transformation of our economy and workforce.

Companies using AI will pull ahead

Companies that deploy foundation models have a clear competitive advantage. With the immense increase in the power of foundation models that we will continue to see in 2024, companies can significantly improve their productivity, efficiency and speed of innovation — depending on the industry, recent studies predict improvements of up to 70% (McKinsey). It's been a long time since technology had such a profound impact on how businesses work.

Rasmus Rothe, cofounder of Merantix

The environmental impact of AI will become a dominant topic

AI’s impact on the environment has frankly been one of the most overlooked topics during the 2023 boom. But I predict that will no longer hold. The “transformers” that underpin AI models consume a tonne of energy to train and use. In 2024, as these systems become more ingrained in our economy, the explosion of the AI world will stand in tension with societal concerns around climate change and energy consumption. That will put economic and political pressure on AI developers to create better model architectures, meaning the ability to train and use AI models with less data and energy. We need better efficiency not just to reduce environmental impact, but also to drive down costs for customers.

A major LLM company will go out of business or be subsumed in some kind of fire sale

I’m not sure who, but some buzzy company will die under its own compute costs, incorrect revenue forecasts or monetisation models, or a crunch in demand because of the growing success of competitors that perform better in specific niches. “Open source” AI will become stronger, with existing models already strong enough for many applications and use cases. The fact is there is immense competition in the LLM space from big tech incumbents with deep pockets, better distribution and more computing power. I expect that some very hyped LLMs may cease to exist next year, or be acquired by others for a fraction of their current valuation.